Modifying the Dexmo haptic controller

During my internship at Dexta Robotics, Shenzhen, I had the opportunity to play around with Dexta Robotics’ flagship product, the Dexmo force-feedback exoskeletal glove. Apart from exhibiting the product at SIGGRAPH 2019, I also worked on trying to improve the technology itself. From the website:

Dexmo is a commercialized lightweight, wireless, force feedback glove. It offers the most compelling force feedback experience with both motion capture and force feedback abilities, designed for the use in training, education, medical, gaming, simulation, aerospace and much more. Dexmo is the most easy-to-use force feedback glove, designed for both researchers, enterprises and consumers. Its natural and intuitive interaction enables everyone to seamlessly touch a truly immersive VR world.

Dexmo captures 11 degrees of freedom hand motion. On top of the bending and splitting motion detection on all fingers, it introduces an additional rotation sensor for the thumb, which allows it to capture the hand motion at full dexterity.

Powered by highly customized servo motors, with compact circuit design and advanced control algorithms, Dexmo’s Direct Drive Method offers higher precision, higher torque output and lower latency than other motors of same sizes.

I wanted to add 5 more degrees of freedom to the glove: one additional DoF for each finger, so that each finger could express its full range of motion. The most natural, biomechanically motivated location for another sensor was the interphalangeal (IP) joints. The only way to add this functionality without severely changing the ergonomics of the glove was to use indirect, light-based time-of-flight (ToF) sensors. These sensor measurements allowed more natural grasp motions to be predicted, and were integrated into the Dexmo software development kit (SDK) in Unity.

My concept made it into Dexta Robotics’ 2020 US patent Fig. 32:

FIGS. 32A-B show the change in the distance between a knuckle of the user’s finger and the system 210 when the user bends the finger. In the unbent state of the finger, as shown by FIG. 32A, the distance between the knuckle and the system 210 is greater than the distance when the finger is bent, as shown in FIG. 32B. In many embodiments, the distance is measured by a proximity sensor and can be used to calculate rotation of the user’s finger and the system 210, which in turn facilitates motion capture of the finger. The use of a proximity sensor demonstrates yet another difference over the prior art, where many existing force feedback exoskeleton devices use rotational sensor(s). The proximity sensor may use infrared sensing, electromagnetic fields, a beam of electromagnetic radiation emitting and sensing, optical/depth sensing, or any other suitable sensing method.

For all Unity and Arduino code please see the repo. Demo video here.

Introduction

By adding an additional sensor on each finger, the SDK can differentiate between grasps with different interphalangeal (IP) joint positions, for example holding a book or holding a cylinder.

Virtual fingers currently only follow one given trajectory with Dexmo, the power grasp (grasping a cylinder). Adding an additional sensor lets Dexmo independently measure finger PIP joints.

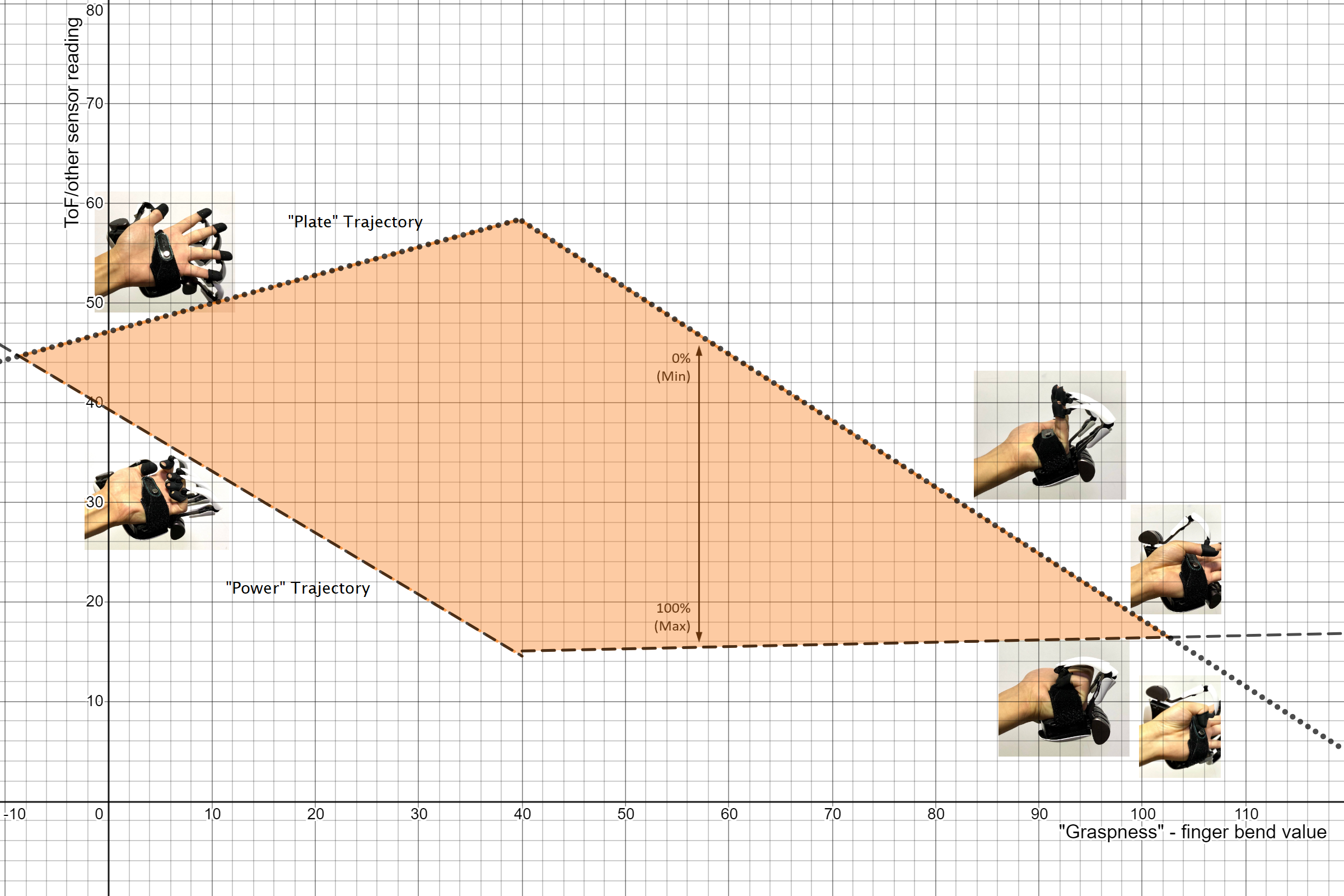

Now any grasp can be expressed as a percentage between the “power” grasp (IP joints fully bent) and “plate” grasp (IP joints fully unbent):

Plate trajectory (minimum):

Power trajectory (maximum):

The thumb’s movements are learnt separately but the idea is the same:

Thumb Plate (IP joint completely straight)

Thumb Power (IP joint completely bent)

Therefore for any given ToF reading and finger bend value, a grasp can be predicted between the minimum and maximum trajectories:

The curves representing the maximum and minimum trajectories are obtained from the regression algorithm.

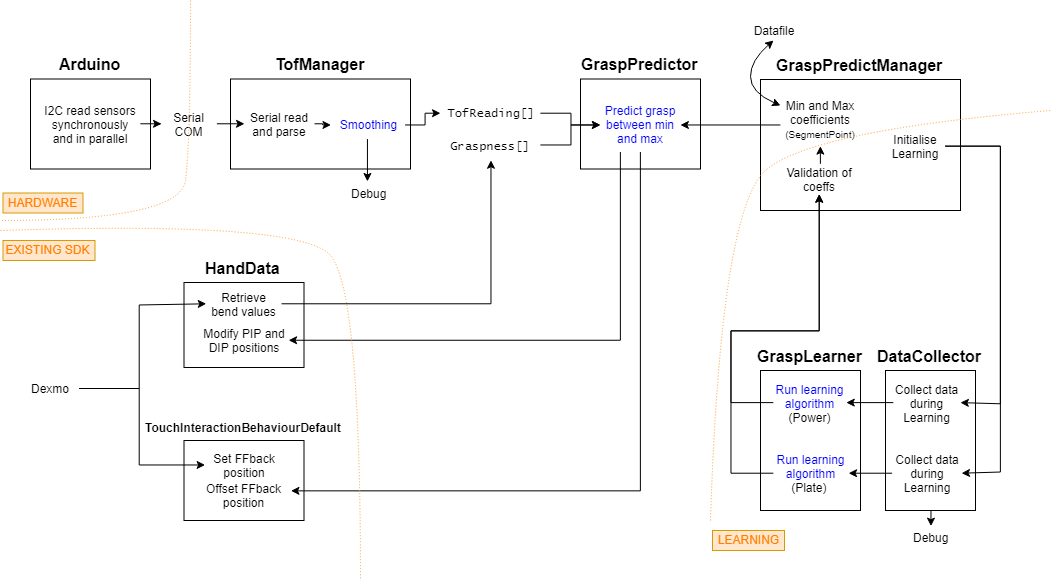

Code overview

Note that the sensor data processing is completely decoupled, so any sensor can be used instead of ToF, as long as its data is output to an array like TofManager.TofReading.

- double[][][] GraspPredictManager.GraspTofCoeffsMax stores Power (MAX) trajectory coefficients as array of 5 fingers, 2 segmented regressions, and polynomial order + 1 coeffs

- double[][][] GraspPredictManager.GraspTofCoeffsMin stores Plate (MIN) trajectory coefficients as array of 5 fingers, 2 segmented regressions, and polynomial order + 1 coeffs

- float[] TofManager.TofReading stores the continuously updated smoothed ToF sensor readings

- float[] TofManager.Graspness stores the continuously updated finger bend value readings

- FingerData.MyGraspPredictor.PredictGrasp(float) returns grasp prediction between plate (min, returns 0) and power (max, returns 100) for given graspness value and according to current ToF reading

Demo

- Plug in Arduino to PC and connect Dexmo.

- Check Arduino found all sensors in Arduino IDE (see Hardware).

- In Demo game, press A to read previously learnt calibration coefficients from file.

- Learning: press C to clear the loaded coefficients before learning new ones. This is important so the current coeffs don’t affect the new ones as they are learnt. There are 4 learning procedures in total:

  - Press J to initialise learning data collection for power grasp for fingers (keep IP joints fully bent)

  - Press K to initialise learning data collection for plate grasp for fingers (keep IP joints fully straight)

  - Press N to initialise learning data collection for power grasp for thumb (keep IP joint fully bent)

  - Press M to initialise learning data collection for plate grasp for thumb (keep IP joint fully straight)

- Press S to save coefficients to file

Technical deep dive

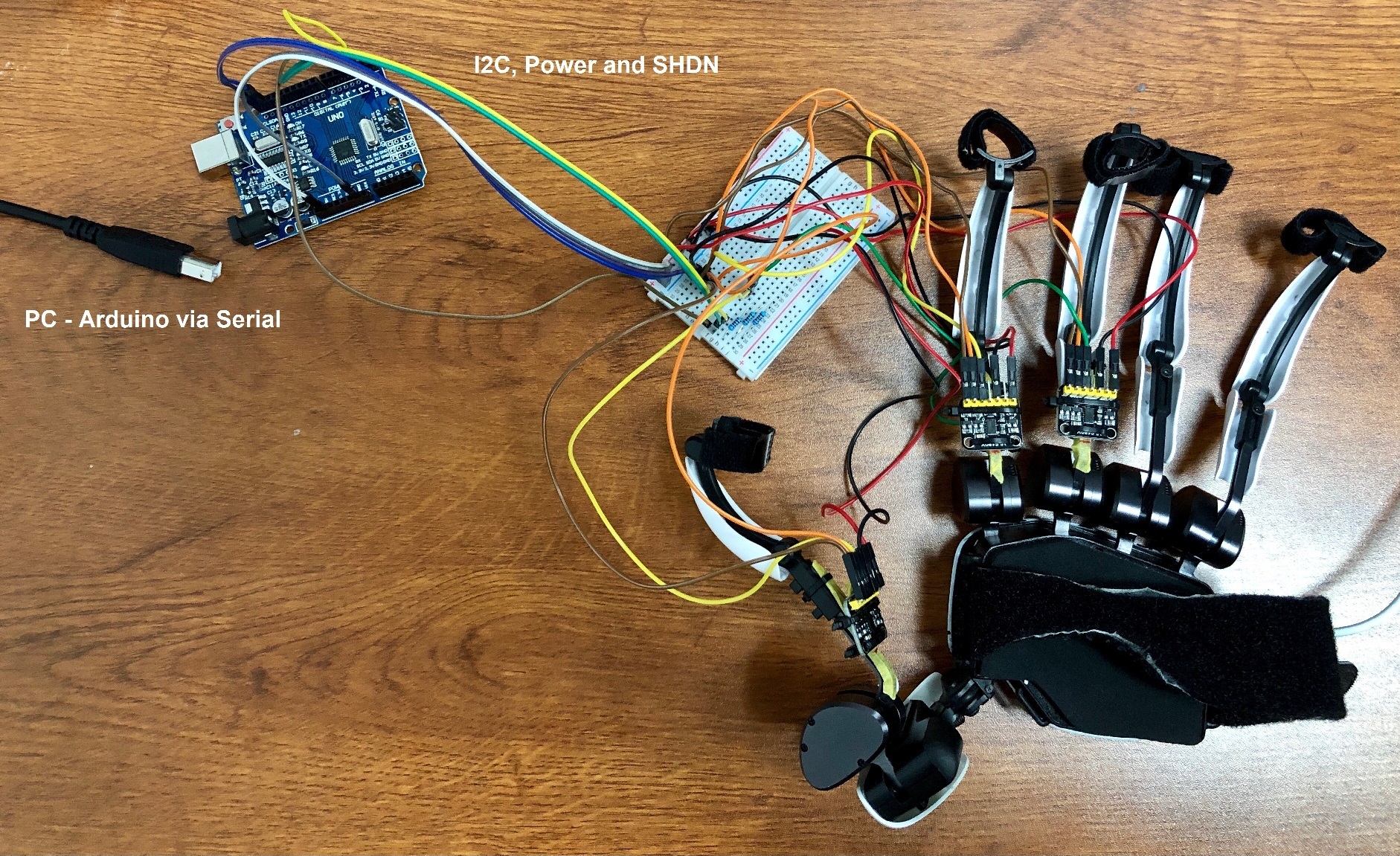

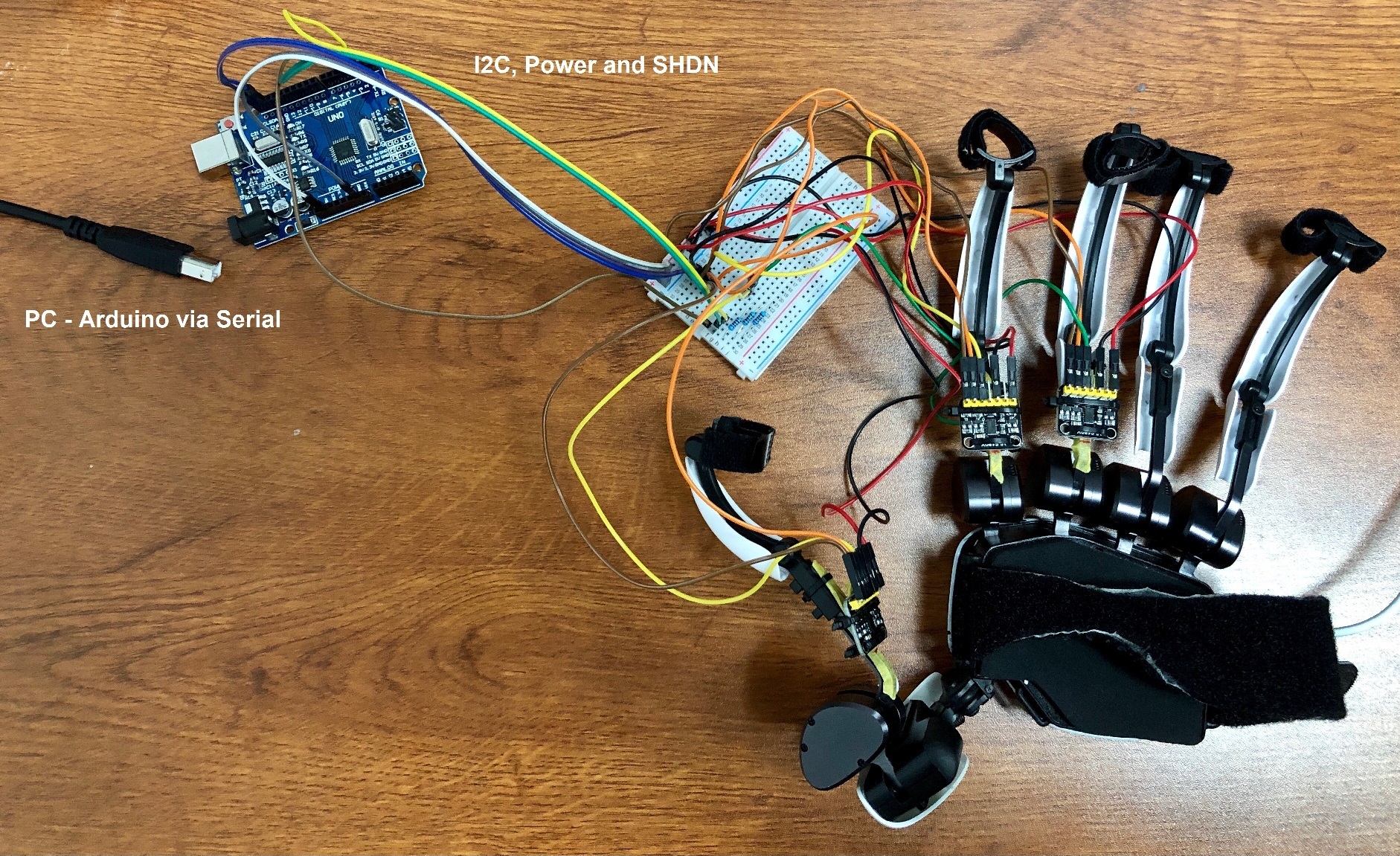

Sensor hardware

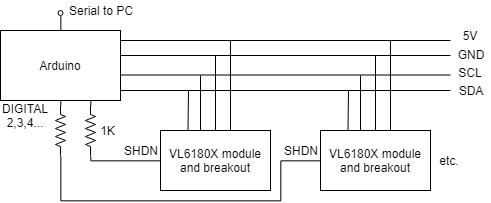

This project uses sensor boards which are based on the VL6180X module.

An Arduino controls the sensors and is connected to PC via serial, default baud rate 115200.

The sensors communicate over I2C and take a 2.8V supply; the board has a level shifter which accepts the Arduino's 5V or 3V3. All sensors connect directly to the same SCL and SDA pins on the Arduino, and each sensor's SHDN (shutdown) pin is connected to an Arduino digital output pin.

The TofSensorArduino.ino script runs on Arduino, which sets sensors up on reset and writes to Serial. The sensor settings and functions are implemented in VL6180.cpp. This is based off the examples shipped with the sensor (password dekp).

On reset:

- All sensors are shut down via their SHDN pins.

- Each sensor is individually booted and set to a different I2C address.

- If an error occurs, ConnectionError will be output.

In the loop:

- Single-shot ranging is started on each sensor.

- Each sensor is polled until all readings are ready.

- Readings output together as a comma separated line:

range0,range1,range2,range3,range4

- If an error is encountered after single-shot ranging, it is treated as non-fatal (i.e. an error due to no target being detected) and a cached value is output instead.
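The output step of the loop can be sketched in plain C++ as below. This is a host-side illustration rather than the code in TofSensorArduino.ino: the function name `formatRangeLine`, the constant `kSensorCount`, and the use of a negative value to mark a failed reading are all assumptions made for the example.

```cpp
#include <array>
#include <cstddef>
#include <string>

constexpr std::size_t kSensorCount = 5;  // one sensor per finger

// Builds one output line of the form "range0,range1,range2,range3,range4".
// Assumption: a negative reading marks a non-fatal ranging error (for example
// no target detected). In that case the last good value held in `cache` is
// output instead. Good readings refresh the cache.
std::string formatRangeLine(const std::array<int, kSensorCount>& readings,
                            std::array<int, kSensorCount>& cache) {
    std::string line;
    for (std::size_t i = 0; i < kSensorCount; ++i) {
        if (readings[i] >= 0) {
            cache[i] = readings[i];
        }
        if (i > 0) {
            line += ',';
        }
        line += std::to_string(cache[i]);
    }
    return line;
}
```

Keeping the line at a fixed five fields, even when a sensor fails, means the PC side can always parse it into the same array layout.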

ConnectionError at I2C intended address

- Reset Arduino via hardware

- Check SHDN pin is pulled high after connection attempted.

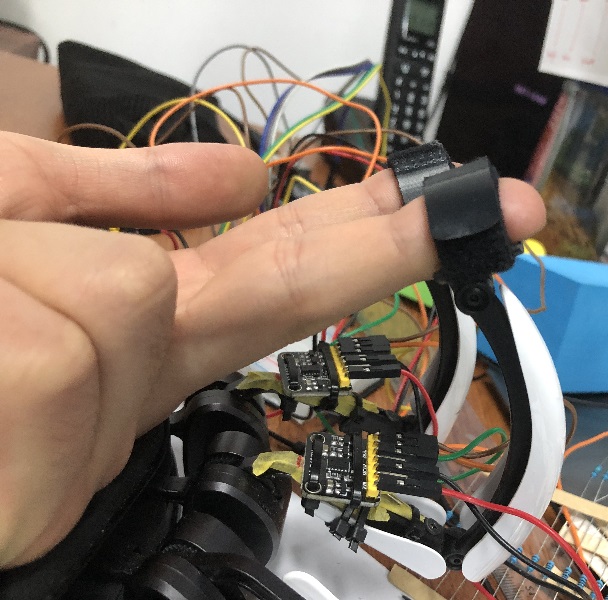

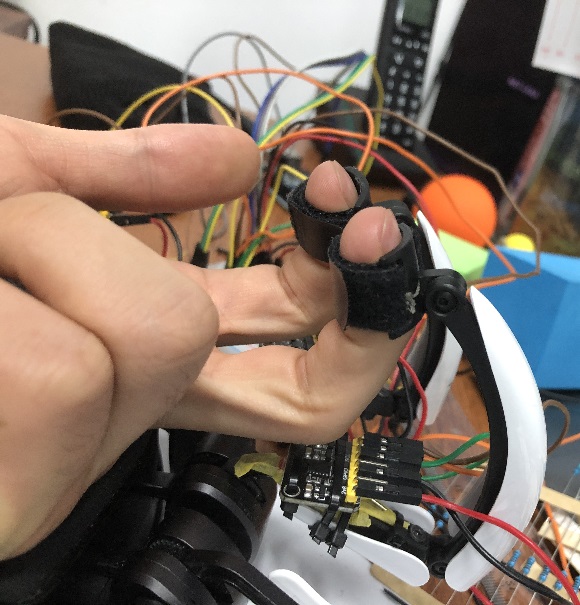

ToF Sensor placement

The sensor needs to detect the difference between a bent PIP and a straight PIP for most finger bend values. Note the difference in distance between the ToF sensor and the finger PIP joint in the following situations:

Plate grasp: finger PIP far away from ToF sensor

Power grasp: finger PIP closer to ToF sensor

Data processing and inference

Description of Grasp Prediction algorithm

GraspPredictor.PredictGrasp

- Retrieve the sensor reading from TofManager.TofReading

- Retrieve the max and min coefficients for the correct finger and segment (whether graspness < or > segment point) from GraspPredictManager.GraspTofCoeffsMax[finger][segmentIndex] and GraspPredictManager.GraspTofCoeffsMin[finger][segmentIndex]

- From the polynomials given by the coefficients, calculate f_max(graspness) and f_min(graspness)

- Find the ToF reading as a linear percentage between min and max

- Remap the min-max range for a more realistic model

- Clamp the value so that unachievable hand grasps cannot occur

Description of Learning/Regression algorithm

GraspPredictManager.Update and GraspLearner.LearnGrasp

-

Data collected into

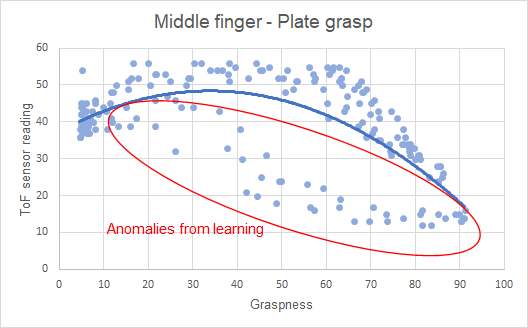

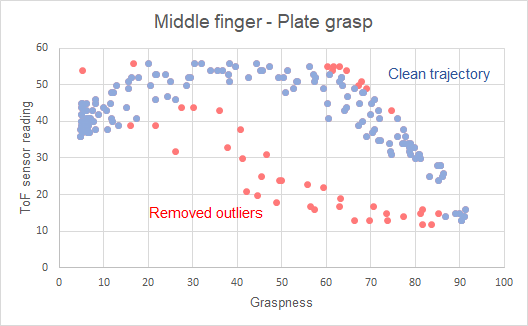

DataCollectoras grasp trajectory is performed, giving data of sensor reading against bend value. There may be anomalies present (away from main grasp trajectory):

-

Outliers removed by applying quadratic regression on entire dataset and removing points > 2*standard deviation away from regression, and repeat, to obtain a clean trajectory on the graph.

-

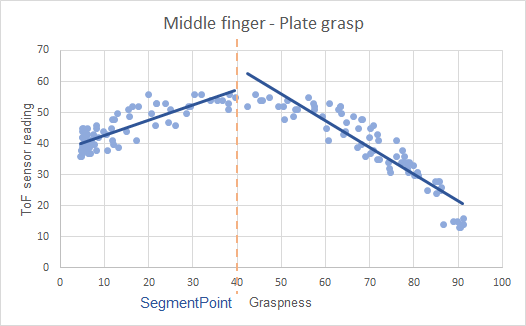

The data is segmented into two halves from

XXX_SegmentPointand linear regression applied on each segment. The coefficients are stored inGraspPredictManager.GraspTofCoeffsXXX[finger][segmentIndex]depending on the current grasp being learned.

-

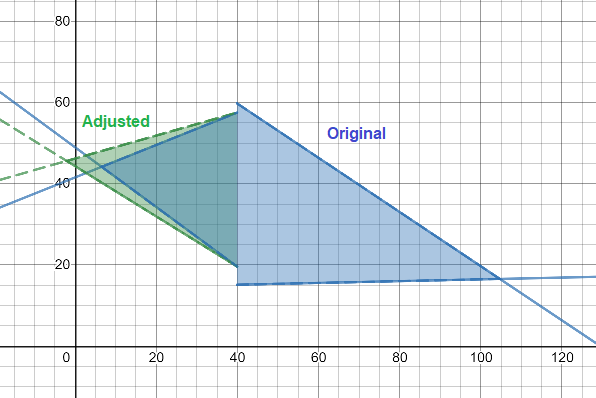

Once both Power and Plate have been learned,

GraspPredictManagervalidation algorthm adjusts the coefficients so that the curves only intersect <0 and >100 so that the prediction space is always valid.
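A minimal sketch of the outlier removal and segmented regression, in plain C++, might look like the following. It is not the SDK's TofRegression code: `Sample`, `fitPolynomial`, `removeOutliers`, and `fitSegments` are hypothetical names, and the `maxPasses` and `noiseFloor` parameters are assumptions added so the repeated clipping always terminates. The validation step is not covered.

```cpp
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

struct Sample { double bend; double tof; };

// Least-squares polynomial fit via the normal equations, solved with
// Gauss-Jordan elimination. Returns coefficients in ascending order.
std::vector<double> fitPolynomial(const std::vector<Sample>& data, int order) {
    const int n = order + 1;
    std::vector<std::vector<double>> m(n, std::vector<double>(n + 1, 0.0));
    for (const Sample& s : data) {
        for (int r = 0; r < n; ++r) {
            for (int c = 0; c < n; ++c) m[r][c] += std::pow(s.bend, r + c);
            m[r][n] += s.tof * std::pow(s.bend, r);
        }
    }
    for (int col = 0; col < n; ++col) {
        int pivot = col;  // partial pivoting
        for (int r = col + 1; r < n; ++r)
            if (std::fabs(m[r][col]) > std::fabs(m[pivot][col])) pivot = r;
        std::swap(m[col], m[pivot]);
        for (int r = 0; r < n; ++r) {
            if (r == col || m[col][col] == 0.0) continue;
            const double factor = m[r][col] / m[col][col];
            for (int c = col; c <= n; ++c) m[r][c] -= factor * m[col][c];
        }
    }
    std::vector<double> coeffs(n, 0.0);
    for (int i = 0; i < n; ++i)
        if (m[i][i] != 0.0) coeffs[i] = m[i][n] / m[i][i];
    return coeffs;
}

double evaluate(const std::vector<double>& coeffs, double x) {
    double y = 0.0;
    for (std::size_t i = coeffs.size(); i-- > 0;) y = y * x + coeffs[i];
    return y;
}

// Repeatedly fits a quadratic and drops points more than 2 standard
// deviations from it. Stops when nothing is removed, when the residuals
// fall below noiseFloor, or after maxPasses (both limits are assumptions).
std::vector<Sample> removeOutliers(std::vector<Sample> data,
                                   int maxPasses = 10, double noiseFloor = 1e-6) {
    for (int pass = 0; pass < maxPasses && data.size() > 3; ++pass) {
        const std::vector<double> c = fitPolynomial(data, 2);
        double sumSq = 0.0;
        for (const Sample& s : data) {
            const double r = s.tof - evaluate(c, s.bend);
            sumSq += r * r;
        }
        const double sigma = std::sqrt(sumSq / static_cast<double>(data.size()));
        if (sigma < noiseFloor) break;
        std::vector<Sample> kept;
        for (const Sample& s : data)
            if (std::fabs(s.tof - evaluate(c, s.bend)) <= 2.0 * sigma) kept.push_back(s);
        if (kept.size() == data.size()) break;
        data = kept;
    }
    return data;
}

struct SegmentedFit { std::vector<double> lower, upper; };

// Splits the data at segmentPoint and fits a line to each half.
SegmentedFit fitSegments(const std::vector<Sample>& data, double segmentPoint) {
    std::vector<Sample> low, high;
    for (const Sample& s : data) (s.bend < segmentPoint ? low : high).push_back(s);
    return {fitPolynomial(low, 1), fitPolynomial(high, 1)};
}
```

Solving the normal equations directly is adequate for low-order fits like these, although centring or scaling the bend values first would improve numerical conditioning.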

Description of ToF sensor smoothing algorithm

TofManager._cacheReturnFilteredReadings

- Parse comma separated data strings of sensors’ values from serial buffer (see Hardware) into array.

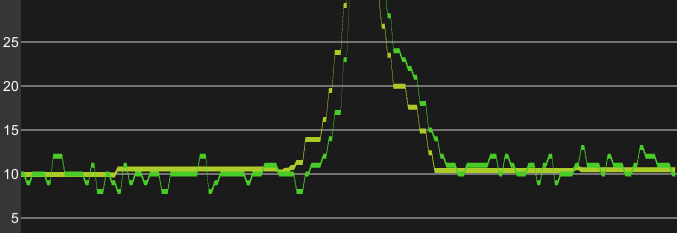

- For each finger, apply exponential recursive filter with new reading and previous cache value:

filtered = k * previous + (1-k) * newwherekis a constant. Note a higherkmeans a more stable value but with a bigger lag between the raw and output data. - Remove glitches (random fluctuations)

- Because the noisy signal’s mean is assumed to be the true value,

tofMovementstracks each each positive and negative fluctuation step and is centred around zero. For small fluctuations the signal is assumed static and the signal is anchored to a cache value. - If the fluctuation step is too large, or the absolute

tofMovementsvalue exceeds a threshold in any direction, the signal is assumed to have started properly changing, and the anchor is released until the signal fluctuates in the other direction again.

- Because the noisy signal’s mean is assumed to be the true value,

- Output to

TofManager.TofReading

ToF data before (green) and after (yellow) filter and glitch removal
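The parsing and exponential filtering steps can be illustrated with the short C++ sketch below. The names `parseReadings` and `ExponentialFilter` are hypothetical, seeding the cache with the first reading is an assumption, and the glitch-removal (anchoring) stage is deliberately left out.

```cpp
#include <cstddef>
#include <exception>
#include <sstream>
#include <string>
#include <vector>

// Parses "range0,range1,..." into numbers. Returns an empty vector if any
// field does not start with a number (for example a ConnectionError line).
std::vector<float> parseReadings(const std::string& line) {
    std::vector<float> values;
    std::stringstream stream(line);
    std::string field;
    while (std::getline(stream, field, ',')) {
        try {
            values.push_back(std::stof(field));
        } catch (const std::exception&) {
            return {};
        }
    }
    return values;
}

// Per-channel recursive filter: filtered = k * previous + (1 - k) * new.
class ExponentialFilter {
public:
    ExponentialFilter(std::size_t channels, float k)
        : k_(k), cache_(channels, 0.0f), primed_(false) {}

    // Feeds one set of raw readings and returns the filtered values.
    // Lines with the wrong number of fields leave the cache unchanged.
    const std::vector<float>& update(const std::vector<float>& raw) {
        if (raw.size() != cache_.size()) {
            return cache_;
        }
        if (!primed_) {  // assumption: seed the cache with the first reading
            cache_ = raw;
            primed_ = true;
            return cache_;
        }
        for (std::size_t i = 0; i < raw.size(); ++i) {
            cache_[i] = k_ * cache_[i] + (1.0f - k_) * raw[i];
        }
        return cache_;
    }

private:
    float k_;
    std::vector<float> cache_;
    bool primed_;
};
```

With k = 0.5, a jump from 10 to 20 on one channel is reported as 15 on the next update, which shows the trade-off between stability and lag.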

SDK documentation

Static Classes

- TofManager reads ToF sensor data from serial and updates smoothed values to a public array. Any sensor can be used in this way, instead of the ToF sensor, for example rotation sensors on Dexmo or other distance sensors.

- GraspPredictManager handles events for reading and writing the regression coefficients associated with the different grasp trajectories. This class also manages the learning of the maximum and minimum grasp trajectories.

- TofRegression provides functions for regression and regression prediction.

- DebugTools contains some tools for showing graphs and writing to Excel files.

Classes

- GraspPredictor serves the purpose of predicting the grasp posture between the maximum (power grasp) and minimum (plate grasp). Unlike the other Dexmo sensors, this maximum and minimum depends on the current bend value.

- GraspLearner is created by GraspPredictManager to handle the data collection and regression for one finger and one grasp trajectory.

- DataCollector is a container for the sensor (TofReading) and bend value (Graspness) values collected during learning.

Additions to existing SDK

HandData.Parse

The additions get each finger’s bend value, store it in TofManager.Graspness, retrieve the grasp prediction based on it, and modify the bend values that are output for each joint. PIP and DIP are now directly updated by graspPrediction and MCP is offset with a scaled value (try switching between plate and power with Dexmo whilst maintaining the same finger bend value).

    for (int i = 0; i < _rawHandData.Fingers.Length; ++i)
    {
        // Finger bend value scaled by 100, shared with the learning code
        float _graspness = 100f * _rawHandData.GetJointBendData(i, 0);
        TofManager.Graspness[i] = _graspness;
        float _graspPrediction = fingerDatas[i].MyGraspPredictor.PredictGrasp(_graspness);
        float _MCPAdjust = Mathf.Clamp01((1f-_graspPrediction) * 0.2f);
        for (int j = 0; j < _rawHandData.Fingers[i].Joints.Length; ++j)
        {
            float _bendness = _rawHandData.GetJointBendData(i, j);
            fingerDatas[i].JointDatas[j].BendValue = _bendness;
            // Keep the raw bend values when graspness is small
            if (_graspness < 8f) break;
            switch ((JointType)j)
            {
                case (JointType.MCP):
                    _bendness += _MCPAdjust;
                    break;
                case (JointType.PIP):
                    if (_graspness > 8f) // Disallow IP change with small graspness as too unreliable (for now)
                    {
                        if (i == (int)FingerType.THUMB)
                        {
                            _bendness += _MCPAdjust;
                        }
                        else
                        {
                            _bendness = _graspPrediction;
                        }
                    }
                    break;
                case (JointType.DIP):
                    if (_graspness > 8f) // Disallow IP change with small graspness as too unreliable (for now)
                        _bendness = _graspPrediction;
                    break;
            }
            fingerDatas[i].JointDatas[j].BendValue = _bendness;
        }
    }

TouchInteractionBehaviourDefault.OnInteractionStay

The additions get each finger’s bend value from FingerData, retrieve the grasp prediction based on it, and offset the force feedback position so the contact looks more realistic.

        if (_touchTarget.EnableForceFeedback)
        {
            float _forceFeedBackBendValue = _fingerData.FingerDataOnSurface[JointType.MCP].BendValue + (_data.Value.IsInward ? forceFeedbackPositionOffset : -forceFeedbackPositionOffset);
            float _graspPrediction = _fingerData.FingerDataOnSurface.MyGraspPredictor.PredictGrasp(100f * _fingerData.FingerDataOnSurface[JointType.MCP].BendValue);
            _forceFeedBackBendValue -= Mathf.Clamp01((1f-(_graspPrediction)) * 0.2f);
            ...
            _forceFeedBackData.Update(_forceFeedBackBendValue, _touchTarget.Stiffness, _data.Value.IsInward);
            ...
        }
    }

FingerData.FingerData (all constructors)

    MyGraspPredictor = new GraspPredictor(_fingerData.Type);

or

    MyGraspPredictor = new GraspPredictor(_type);

DexmoDatabase.asset

TuringValue and TuringPoint are set to 1 for PIP joints and 0 for DIP joints, as these bend values are now set directly by the grasp prediction.